Hello TEC community,

I have been playing with the Hatch dashboard and analyzing the results from different parameterizations. I noticed in these experiments that the distribution of Impact Hours seems more skewed than I would have expected, and it made me realize that we had likely never questioned the outputs of the data from the praise process.

Although I recognize that people receiving pay from the organization only received 15% of the tokens relative to unpaid contributors, the data seems to suggest that some forms of work in the TEC were more recognized by the praise process than others, and I thought this fact deserved a conversation involving the community.

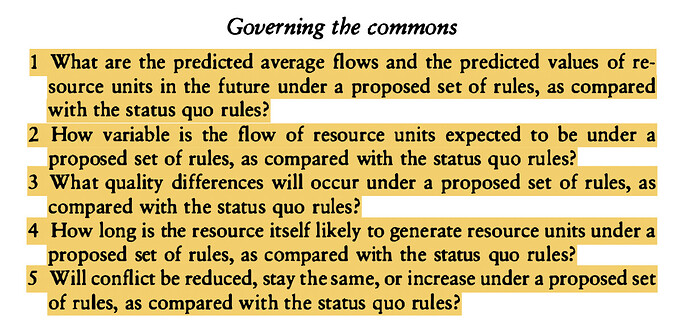

Looking at the praise data, it seems that many small praises accumulate significantly more tokens than larger tasks that are praised less frequently, even when each of those larger praises is scored much higher. This raises the question: do the results we see fall within what we would call a fair or accurate distribution of tokens for the work that was put into the TEC?

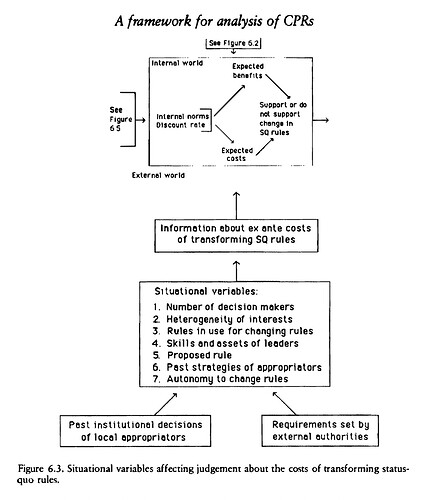
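To make the accumulation effect concrete, here is a minimal sketch with made-up numbers. It assumes a contributor's total is simply the sum of their praise scores, which may not match the actual praise quantification; the names `frequent_helper` and `deep_worker` and all scores are hypothetical.

```python
from collections import defaultdict

# Hypothetical praise records as (receiver, score) pairs. Illustrative only,
# not real TEC data.
praises = (
    [("frequent_helper", 5)] * 40   # many small praises
    + [("deep_worker", 55)] * 3     # a few large praises
)

def totals_by_receiver(records):
    """Sum praise scores per receiver (assumes a plain additive model)."""
    totals = defaultdict(int)
    for receiver, score in records:
        totals[receiver] += score
    return dict(totals)

totals = totals_by_receiver(praises)
for receiver, total in sorted(totals.items()):
    print(receiver, total)
```

In this toy case each large praise is scored eleven times higher than a small one, yet the frequently praised contributor still ends up with the larger total.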

Considering that significant governance in the TEC will be held according to these IH distributions (or funds available via RageQuitting), I believe this raises questions the community should discuss prior to the Hatch.

I don’t mean this as an offense to anyone who received more tokens than anyone else - in fact, I think Rawls’s Veil of Ignorance should be invoked here to some degree: we should set aside the desire to maximize any individual’s best outcome and focus on ensuring outcomes that are fairest to all.

We have established the Praise process to track and quantify contributions, but afaik we haven’t taken a step back to do any quality assurance on whether those processes are outputting signals that we are comfortable with as a community. When using new processes like the praise system to quantify contributions to an ecosystem, we are measuring with Wittgenstein’s Ruler. In other words, we first need to measure the ruler itself to know if it’s good at measuring anything else.

I have put some overall thoughts on improving the praise process into this document, and I believe we should do some preliminary data analysis of the distribution of Impact Hours to learn more, as well as discuss as a community whether there is wider consensus as to whether this is an issue, and how we can address and mitigate it.

A few items to consider in this proposal:

- Engage the skills of a few data scientists to understand the distribution of IH tokens more thoroughly & whether there are alternative weightings of the data that feel more accurate to the community

- Discuss as a community what a “fair” or “more accurate” distribution of tokens looks & feels like, understanding that this may be fuzzy & vary widely by perspective

- If determined necessary, consider various ways to adjust the distribution to be more accurate to how we feel it should be distributed

- Create a new mandate for praise-givers - dish widely in the TE ecosystem. We want to get IH & TEC tokens to Token Engineers too! Learn what’s going on and help the system recognize its value. Many TEs are too busy doing the work to be present in TEC meetings dishing praise for that work.
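As one possible starting point for the data analysis suggested above, here is a rough sketch of a Gini coefficient, a standard measure of how concentrated a distribution is (0 means everyone holds the same amount, values near 1 mean a few holders have almost everything). The `gini` helper and the sample balances are hypothetical, and this is only one of many ways to look at skew.

```python
def gini(values):
    """Gini coefficient of a list of non-negative balances."""
    xs = sorted(values)
    n = len(xs)
    total = sum(xs)
    if n == 0 or total == 0:
        return 0.0
    # Rank-weighted sum over the ascending-sorted balances (ranks start at 1).
    weighted = sum(rank * x for rank, x in enumerate(xs, start=1))
    return 2 * weighted / (n * total) - (n + 1) / n

# Hypothetical IH balances, for illustration only.
equal_split = [10, 10, 10, 10]
concentrated = [0, 0, 0, 40]

print(gini(equal_split))    # perfectly even distribution
print(gini(concentrated))   # one holder has everything
```

Comparing this number across alternative weightings of the praise data would give the community one concrete, if crude, signal to discuss alongside the charts.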

I hope these thoughts and recommendations can inspire community conversation around this important topic - comments, disagreement, and further discussion welcome!

Big love,

Jeff

), and I don’t have a big comprehension of what could go wrong with acknowledging contributions. I will give my opinion on what we could do and what we shouldn’t do to improve the system and the valuations.

Let’s open up this Praise System algorithm and see what’s inside!

and exercise our democratic muscles as a community

, still plenty of room to grow and that excites me)

Look forward to seeing you there!