Proposal Title: Reward System moving forward


Who is going to be affected by this proposal?

The community in general, but especially the Stewards, who have dedicated so much to the Praise process.

Who are the experts of the proposed subject?

@Griff @octopus @ygg_anderson @mateodaza @Vyvy-vi @akrtws

Feel free to tag others who might also have a lot to say about this proposal.

A HUGE thank you to @octopus, who helped so much and offered great solutions to improve Praise and implement frequent analysis, and to @ygg_anderson, who offered the Labs space to develop this implementation!

Description:

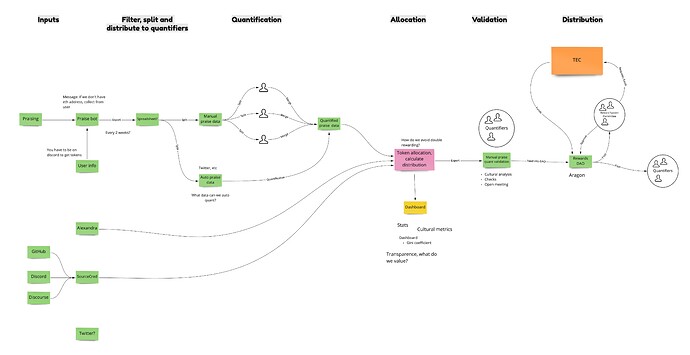

The Praise data analysis that happened a couple of months ago showed us many routes for improving Praise. The quantification and analysis processes were the two main points that needed improvement, and interoperability with SourceCred is a desired addition that has been discussed for a while. This proposal is a first step forward; other, more complex improvements can be proposed in the future, once results from the Governauts' Reward System research group become available.

Problem

- The quantification process is time-consuming

- Only a few people were volunteering to do it

- The data set was dirty, and the analysis covered a whole year of data at once

- Praise and SourceCred are rewarding similar things

Proposed solution

- Make the quantification process partially asynchronous

- Have one short meeting to discuss questions and reflections from the async process

- Produce a brief report, analysis, and distribution every 2 weeks

- Distribute rewards in TEC tokens via the Reward DAO (the one we've been using for SourceCred)

- Limit Praise to tasks that aren't rewarded by SourceCred

- Implement Alexandra, the bot developed by Johann Saurez from LTF that records the time people spend in Discord calls

Proposal Details

Steps required for Praise:

- Take the CSV file of all praises (which is already being generated)

- Load the CSV file in Python

- Write the praises to new CSV files or to separate sheets in an Excel file

- Automatically push them to GitHub or post them on Google Docs

- Create a list that randomly assigns sheets to a set of judges (to minimize correlation between similar judges who think alike)

  - The same sheet would be handed to 2 or 3 people, so the final result is still an average instead of one person deciding the value of a praise.

- Pull the finished sheets back into a single document

- Automatically generate the Gini coefficient, histograms, or any other metrics we find useful

- Have a call to discuss the analysis and the quantification process. This will bring us cultural insights and help us spot problems and strengths at an early stage.

- Write a brief report to add transparency (it can simply be the published notes from this call)

- Unlike before, no deductions will be made in the final data for paid contributors
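The steps above can be sketched in Python, which the proposal already names for loading the CSV. This is a minimal sketch under stated assumptions: the column names `id` and `receiver`, the sheet size, and every function name below are hypothetical, not taken from the actual Praise export. Rather than a purely random draw, it gives each sheet 2 or 3 distinct judges picked at random among the least-loaded ones, so the workload stays even. Scores are then averaged per praise, and the Gini coefficient is computed over each receiver's total.

```python
import csv
import random
from collections import defaultdict
from pathlib import Path
from statistics import mean


def load_praise(path):
    """Read the exported praise CSV into a list of dicts (one per praise)."""
    with open(path, newline="", encoding="utf-8") as f:
        return list(csv.DictReader(f))


def split_into_sheets(rows, sheet_size):
    """Chunk the praise rows into sheets of at most `sheet_size` rows."""
    return [rows[i:i + sheet_size] for i in range(0, len(rows), sheet_size)]


def write_sheets(sheets, out_dir):
    """Write each sheet to its own CSV file (sheet_00.csv, sheet_01.csv, ...)."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    for n, sheet in enumerate(sheets):
        with open(out / f"sheet_{n:02d}.csv", "w", newline="", encoding="utf-8") as f:
            writer = csv.DictWriter(f, fieldnames=list(sheet[0].keys()))
            writer.writeheader()
            writer.writerows(sheet)


def assign_sheets(num_sheets, judges, judges_per_sheet=3, seed=None):
    """Give every sheet `judges_per_sheet` distinct judges, picked at random
    among the judges with the fewest sheets so far (keeps workloads balanced)."""
    if judges_per_sheet > len(judges):
        raise ValueError("not enough judges for the requested overlap")
    rng = random.Random(seed)
    load = {judge: 0 for judge in judges}
    assignment = {}
    for sheet in range(num_sheets):
        candidates = list(judges)
        rng.shuffle(candidates)                  # random order among equals...
        candidates.sort(key=lambda j: load[j])   # ...stable sort: least-loaded first
        chosen = candidates[:judges_per_sheet]
        for judge in chosen:
            load[judge] += 1
        assignment[sheet] = chosen
    return assignment


def average_scores(scores):
    """scores: iterable of (praise_id, judge, value). Returns {praise_id: mean}."""
    by_praise = defaultdict(list)
    for praise_id, _judge, value in scores:
        by_praise[praise_id].append(value)
    return {pid: mean(values) for pid, values in by_praise.items()}


def totals_by_receiver(rows, averages):
    """Sum the averaged praise values per receiver (hypothetical column names)."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["receiver"]] += averages.get(row["id"], 0.0)
    return dict(totals)


def gini(values):
    """Gini coefficient: 0 = perfectly equal, (n-1)/n = one person gets everything."""
    xs = sorted(values)
    n, total = len(xs), sum(xs)
    if n == 0 or total == 0:
        return 0.0
    weighted = sum((i + 1) * x for i, x in enumerate(xs))
    return 2 * weighted / (n * total) - (n + 1) / n


if __name__ == "__main__":
    rows = [
        {"id": "p1", "receiver": "alice"},
        {"id": "p2", "receiver": "bob"},
        {"id": "p3", "receiver": "alice"},
    ]
    sheets = split_into_sheets(rows, sheet_size=2)
    plan = assign_sheets(len(sheets), ["j1", "j2", "j3", "j4"], judges_per_sheet=3, seed=42)
    scores = [("p1", "j1", 3), ("p1", "j2", 5), ("p2", "j1", 8), ("p3", "j3", 1)]
    totals = totals_by_receiver(rows, average_scores(scores))
    print(plan)
    print(totals, gini(totals.values()))
```

Passing a fixed `seed` makes an assignment reproducible, which could help if the committee wants to publish how sheets were handed out.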

This model will distribute the work among multiple people and minimize the time each person spends on quantification. It means we'll need 10 to 15 quantifiers, a role that can be compensated by the Commons.
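To see how the group size and the 2-or-3-judge overlap trade off, here is a small back-of-the-envelope helper. It assumes the work is spread evenly, and the 600-praise volume per 2-week period is a purely hypothetical figure, not a measured one.

```python
import math


def praises_per_quantifier(num_praises, judges_per_praise, num_quantifiers):
    """Scores each quantifier must give if every praise is read by
    `judges_per_praise` people and the work is spread evenly (rounded up)."""
    return math.ceil(num_praises * judges_per_praise / num_quantifiers)


if __name__ == "__main__":
    num_praises = 600  # hypothetical two-week volume, not a measured figure
    for quantifiers in (10, 15):
        for overlap in (2, 3):
            load = praises_per_quantifier(num_praises, overlap, quantifiers)
            print(f"{quantifiers} quantifiers, {overlap} judges per praise: {load} each")
```

With that hypothetical volume, the load per person ranges from 80 scores (15 quantifiers, 2 judges per praise) to 180 (10 quantifiers, 3 judges per praise).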

The compensation amount is still to be discussed, but it can be part of the Reward Proposal, which should be templated so it can be sent to the DAO on a regular basis (perhaps every 2 or 3 months).

This can be a role for people who want to get more involved in the community, since Praise is a good window into what happens and what is valued. We should aim for cultural diversity in the group of quantifiers, as well as diversity of experience, mixing older members with newer ones.

Interoperability with SourceCred and Alexandra

- SourceCred is currently capturing GitHub and Discourse contributions.

- Praise captures a multitude of subjective and objective contributions.

- Alexandra hasn't been implemented yet, but it would capture time spent in calls.

Integrating these 3 tools should reduce the scope of Praise. We'll need cultural guidelines and training to get there, but it will be incredibly valuable for us: it will further reduce the admin time spent on quantification and shape Praise into an even better tool for subjective contributions.

Rewards Distribution

I propose we use the Reward DAO instance we’ve been using for SourceCred to send praise and SourceCred rewards to all the contributors.

The DAO will have only these 2 functions (Praise and SourceCred rewards), plus sending compensation to the quantifiers. It will be managed by the SourceCred Committee, which will become the Reward System Committee.

- Every 2 or 3 months, a committee member submits a proposal to the TEC requesting funds for the Reward DAO

- Paying in TEC tokens helps empower our economy, so a designated committee member would swap wxDAI for TEC tokens

- Every 2 weeks, after the quantification, rewards are sent in TEC tokens to the contributors from the Reward DAO
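A minimal sketch of how a 2-week distribution could be computed, assuming each contributor's TEC amount is proportional to their quantified score for the period. The proposal does not fix the split rule or how a 2-to-3-month request is paced across periods; both, and the function name, are assumptions here. `Decimal` with rounding down keeps the payouts from ever exceeding the period budget.

```python
from decimal import Decimal, ROUND_DOWN


def split_budget(budget, scores, decimals=4):
    """Split a period's TEC budget in proportion to each contributor's score.

    Amounts are rounded down, so the sum never exceeds the budget; the
    leftover dust can roll over to the next period.
    """
    total = sum(Decimal(str(s)) for s in scores.values())
    if total <= 0:
        return {name: Decimal(0) for name in scores}
    quantum = Decimal(1).scaleb(-decimals)  # e.g. 0.0001 for decimals=4
    return {
        name: (budget * Decimal(str(s)) / total).quantize(quantum, rounding=ROUND_DOWN)
        for name, s in scores.items()
    }


if __name__ == "__main__":
    # Hypothetical: one funding request meant to cover six 2-week periods.
    period_budget = Decimal("6000") / 6
    scores = {"alice": 12.5, "bob": 7.5, "carol": 5}
    for name, amount in split_budget(period_budget, scores).items():
        print(name, amount)
```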

What value will this provide for the TE community, commons and ecosystem?

- A continuous stream of funding for all TEC collaborators

- Quantifiers can work on their own schedule, as long as they finish by a predetermined deadline

- Quantifiers will have less social influence on each other, and there will be no room for "doctoring" the numbers

- Analysis will be instant and frequent

- Data-driven cultural insights will be constantly available

- Tool interoperability will be tested

- Rewards will bring movement to the TEC token economy, and people contributing work will have a governance voice as well

Expected duration or delivery date

6 weeks (optimistic estimate)

Team Information

SOFT GOV wg, LABS wg, LTF team support

They're gold. And from what I read, it was not uncommon for them to be undervalued. I can't speak to their desires, but I would value their input.

, wchargin