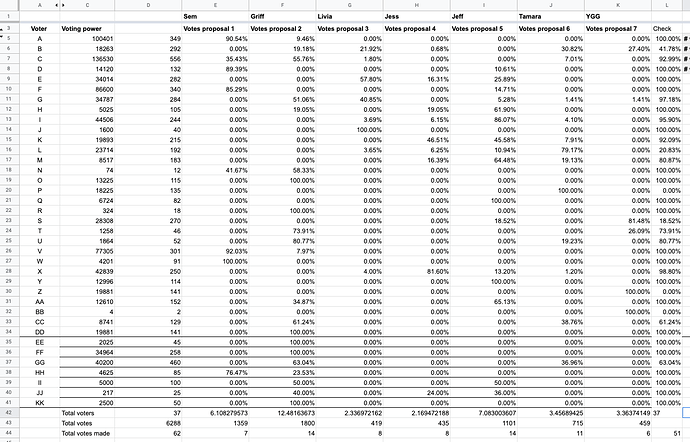

Right now, no proposal has a majority of votes, not even close. The top-voted proposal has ~40%, and the results have flipped: at some point in the last few hours the Praisemageddon proposal was in the lead, tho the No Aliens proposal has come back and taken a large-ish lead.

This has been a horrible horrible experience for our community. I regret that we even debated this at all. I really do. The repercussions of this debate are going to hurt no matter what the results are.

We have an extremely polarized community right now. This shit is like Democrats vs Republicans on Twitter; it’s gross.

That said, we have to accept that we are where we are and think about what is best for the Commons.

The rules set forth for this vote say what we should do in the case of a polarized vote:

From Pre-Hatch Impact Hours Distribution Analysis - #50 by liviade

—Quote—

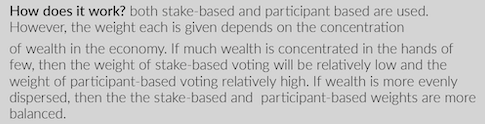

What if there is a polarized scenario, where 2 different proposals have an evenly split majority of votes in the run-off?

(By evenly split, we consider a difference of less than 10 votes between first and second place.)

In this case, the top 2 authors will be invited to a hack session to merge their proposals together and commit to a collaborative result.

—End Quote—

I think it was a mistake to define this so specifically as 10 votes… and that the spirit of the idea is that we should accept a polarized result (Praise Juanka for the forethought here).

I would like to propose that we follow the spirit of these rules and that Jeff and I hack out a final solution that is a combination of both proposals. We have a lot of different opinions on a lot of things, but above everything else, we love each other and this community and will find a solution that we can be proud of.

What I would propose to start is that ~50% of the impact hours from one of the top 2 proposals is counted as people’s IH, ~50% of the impact hours from the other is counted as people’s IH, and the 2 amounts get added together.

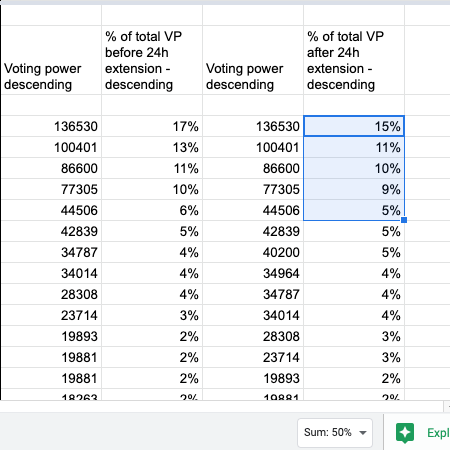

The 50% wouldn’t be 50% but would be the % of votes that each proposal got.
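To make the arithmetic concrete, here is a rough sketch of how a vote-weighted blend could be computed. Everything in it is an assumption for illustration: the function name `blend_impact_hours`, the dict-per-proposal layout, and especially the choice to normalize the weights over just the top 2 proposals so they add up to 100%. The actual numbers and method would be whatever comes out of the hack session.

```python
def blend_impact_hours(ih_a, ih_b, votes_a, votes_b):
    """Blend two per-person IH tallies, weighting each by its proposal's vote share.

    ih_a, ih_b: dicts mapping a person to their IH under proposal A / proposal B
                (hypothetical layout, for illustration only).
    votes_a, votes_b: votes received by each of the two proposals.
    """
    total = votes_a + votes_b
    if total <= 0:
        raise ValueError("need at least one vote across the two proposals")

    # Assumption: weights are each proposal's share of the votes cast
    # for these two proposals only, so they sum to 1.
    weight_a = votes_a / total
    weight_b = votes_b / total

    # Someone missing from one tally is treated as having 0 IH under it.
    people = set(ih_a) | set(ih_b)
    return {
        person: weight_a * ih_a.get(person, 0.0) + weight_b * ih_b.get(person, 0.0)
        for person in people
    }


if __name__ == "__main__":
    # Made-up numbers, not real IH data.
    under_a = {"alice": 100.0, "bob": 50.0}
    under_b = {"alice": 60.0, "bob": 90.0}
    print(blend_impact_hours(under_a, under_b, votes_a=60, votes_b=40))
```

With a 60/40 split, each person ends up with 60% of their IH under one proposal plus 40% of their IH under the other, so nobody's result comes purely from one side.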

This allows everyone to win a little bit, and everyone’s votes to be counted which is just the best that we can do right now. Instead of a clearly defined set of winners and losers, we can have all the voters for these 2 proposals represented in the final outcome.

Honestly, this whole time I have been watching this as a train wreck waiting to happen. I never saw a way to push the car off the track to avoid the destruction ahead…

Amazingly, I think that with a proposal like this we can actually “split the baby in half” and it will work out. (Google that phrase if you don’t know what I’m talking about.)

I have had a lot of anxiety about this, and when Livia brainstormed with me and this idea popped out, it was the first time in weeks that I felt hope around this situation. That we could actually have a solution that doesn’t completely just disregard a large swath of our community.

I’m not happy about an intervention at all. I never thought it was a good idea; we should have clearly laid out the rules in advance if we wanted to do that, and then stuck with them. IMO it’s too late to change the rules and decide to take from some to give to others. But that is not everyone’s opinion, and I really love that we have a community of diverse opinions.

Looking forward, COMPROMISE is a value that will hold us together better than accepting the vile, false dichotomies of Democrat vs Republican, socialist vs libertarian, good vs bad, right vs wrong.

There is no “good” here, there is no “right” here. There is only best…

Compromise is something I think we as a community can be proud of, and it is at the very least in the spirit of the rules as set forth for this process, if not explicitly defined as the solution for a polarized outcome.
