This.

(from the same link as before HackMD - Collaborative Markdown Knowledge Base but to make it easier to read on the forum, pasted below!)

The end of the first round of voting

Updated the xls with the final results of the first round of votes. The runoff votes are now happening, go here and vote! The vote ends Tuesday, July 6, at 8pm CET.

Of interest to me here is that:

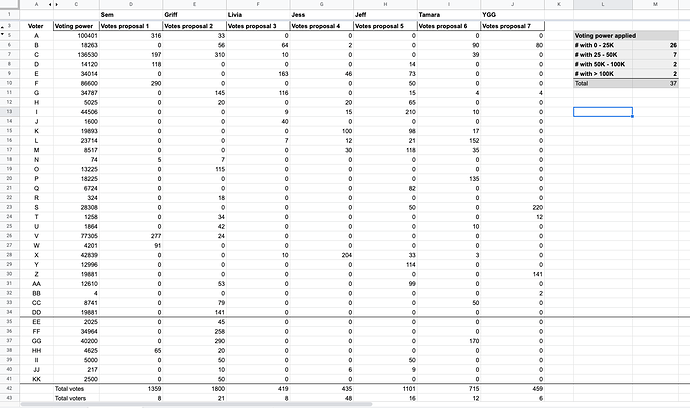

- If not for the 24 hour extension of the vote (noted by the black line between voter DD and EE), the decision would have been made by, essentially, 4 of the 30 voters.

- The additional 7 voters in that 24 hour period applied 71% of their quadratic votes (723 of 1023) to proposal 2, which swung the vote in that direction.

- What lessons are there to be learned here for the upcoming runoff vote and for voting process design in general?

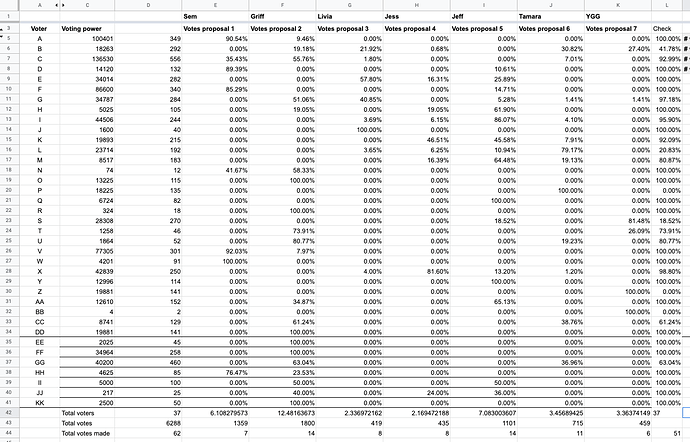

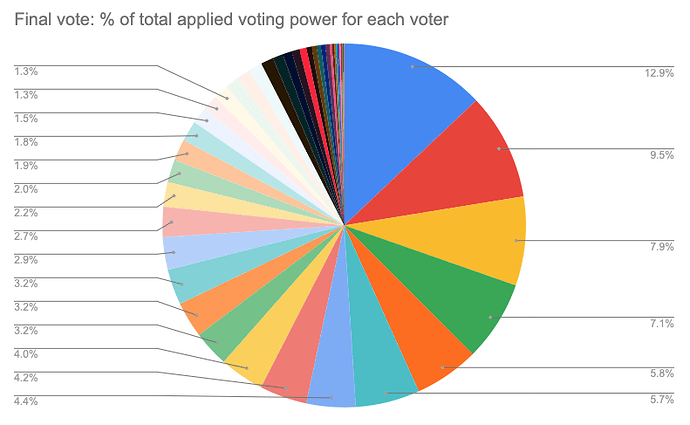

Griff added a tab to show a recombination of the percentage of voting power applied.

So if:

- Voter B applied 60% of her voting power to proposal 3 and

- Voter H applied 40% of her voting power to proposal 3

Then:

- Proposal 3 would have a combined 100% of a voter-unit.

This is super interesting! It’s not exactly 1-person, 1-vote but it does ingeniously try to simulate that idea. Maybe it’s more like 1-recombined-person, 1-vote.
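The recombination idea can be sketched in a few lines of Python. This is only an illustration: the `recombine` helper and the proposal keys are hypothetical, the allocations beyond the 60% / 40% example are made up, and the actual spreadsheet tab may compute this differently.

```python
from collections import defaultdict

def recombine(shares_by_voter):
    """Sum each voter's fraction of applied voting power per proposal.

    shares_by_voter maps voter -> {proposal: fraction of that voter's
    own voting power}. Each voter's fractions should sum to at most 1.
    """
    voter_units = defaultdict(float)
    for shares in shares_by_voter.values():
        for proposal, fraction in shares.items():
            voter_units[proposal] += fraction
    return dict(voter_units)

# B puts 60% on proposal 3 and H puts 40% on it (the example above).
# The remaining allocations are hypothetical filler.
example = {
    "B": {"proposal_3": 0.6, "proposal_1": 0.4},
    "H": {"proposal_3": 0.4, "proposal_2": 0.6},
}
units = recombine(example)
print(units)
```

Under this sketch every voter contributes exactly one voter-unit in total, no matter how many tokens they hold, which is what makes it resemble 1-person, 1-vote.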

This is what those results look like:

The Runoff is live!! Tokenlog · Token-weighted backlogs

Yesterday Jeff, Griff, Tamara, Jess, Juanka, Zeptimus and myself had a session to prepare the runoff proposals and their details. The top 4 went live and, considering the community feedback, we decided to extend the closing of the votes to Thursday, July 8th.

No more proposals will be submitted in this round and we’ll be hosting debates this week to discuss them. Feel free to host a debate as well by adding it to the TE calendar!

Thanks to everyone who has been involved in this conversation. Please help us promote this voting round!

Thanks for your continued analysis @Tamara! I think this is a fantastic learning experience for the TEC community to get a tangible feel for various kinds of voting: 1P1V, 1T1V, quadratic voting, and how all of these tools give us different signals to interpret outcomes in the context of the defined decision space.

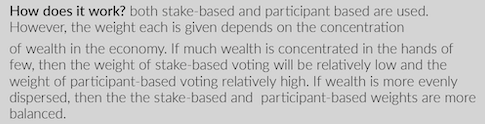

I wanted to point out another vote weighting tool called Democonomy (introduced by the Saga project), in case it might be useful here (or in the future) as well. It dynamically weights decision making between whales and minnows according to the Gini coefficient of the ecosystem. They also produced a fantastic infographic that walks you through how this plays out in several scenarios.

The infographic is a great way to discuss and demonstrate these various voting optimization tools as well, perhaps something we can emulate! Learnings on every side.

For more information on Democonomy voting: Resolving the Stake-Based vs. Participant-Based Voting Dilemma

Very interesting. I’d love to know how it is working out applied there. This really stands out:

I added some pictures today.

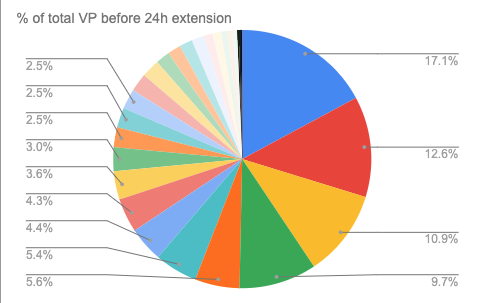

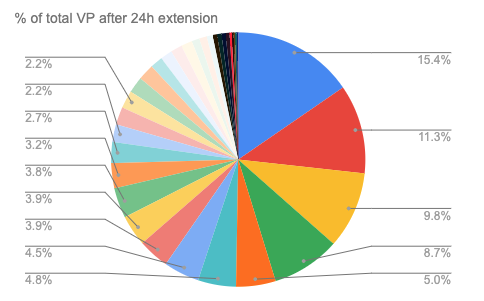

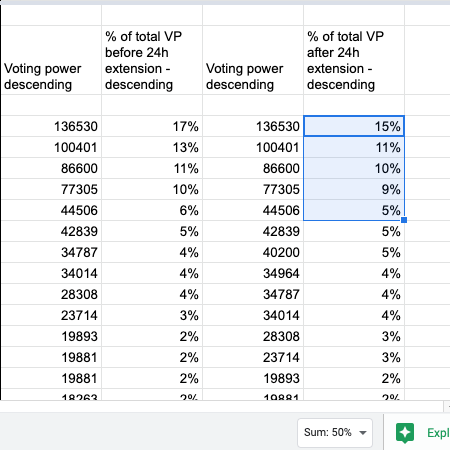

Voting power breakdown

The data we have here is empirical. It accurately shows what actually did happen based on our employment of quadratic voting.

The data shows how many unique addresses participated, how much voting power they used and where they applied that voting power. That’s all.

Here is what stood out about the results to me:

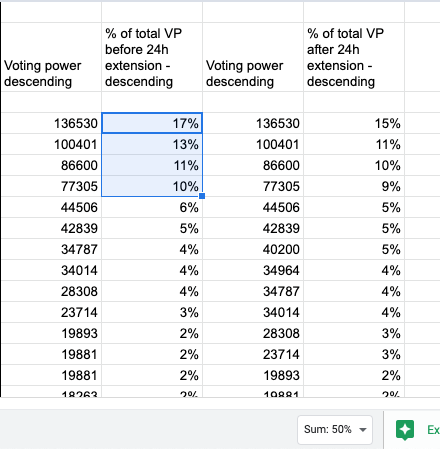

- Before an emergency 24 hour extension was granted:

  - The top 4 out of 30 voters (13%) accounted for 50% of the entire voting power used.

- After the 24 hour extension:

  - The top 5 out of 37 voters (14%) accounted for 50% of the entire voting power used.

- Are these the results we expected?

- Is this what we want?

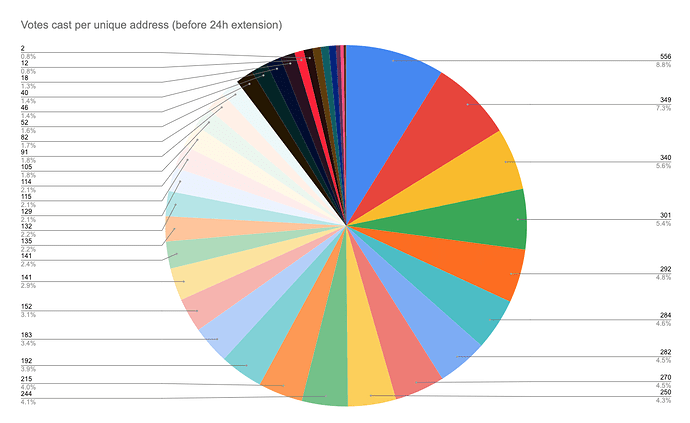

Before the 24 hour extension:

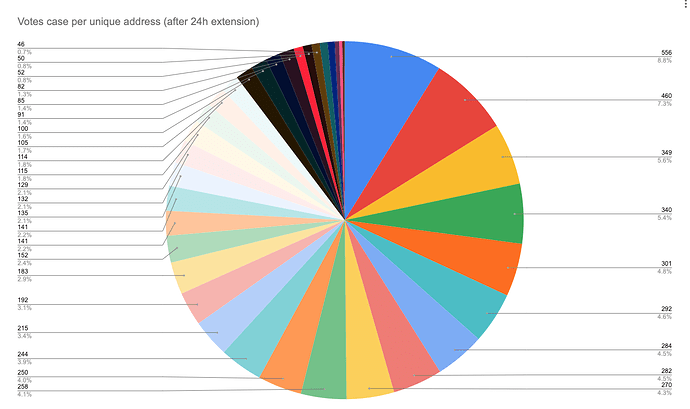

After the 24 hour extension:

Choosing the runoff proposals

In the end, the final runoff proposals were determined based on Griff’s calculations of applied voting power. That is the simulation of one-person one-vote in one of the xls tabs.

Using that method there is only one change in rank: Jeff’s praisemageddon comes in second, ahead of Sem’s no-intervention. The rest appear to be ranked in the same order as the quadratic voting results.

The Saga project closed its doors earlier this year. I will ask them if they have any data on how this voting optimization tool worked for them.

In terms of voting optimizations (quadratic vs 1P1V vs democonomy), I wanted to share some feedback on the IH process so far from @mzargham: the power law distribution of IH is exponential, not polynomial, so maybe you need LOG voting not square root. I wonder if this is an addition to Tokenlog that could prove useful in this and/or future votes with exponential (i.e. highly inequitable) token distributions.
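To get a feel for how square-root and log weighting differ on a very unequal distribution, here is a rough Python sketch. The balances are invented, and `log_weight` uses `log(1 + x)`, which is just one possible choice and not something Tokenlog is known to implement.

```python
import math

def sqrt_weight(balance):
    return math.sqrt(balance)

def log_weight(balance):
    # log(1 + x) so that a zero balance maps to zero weight (an assumption).
    return math.log1p(balance)

def top_share(balances, weight):
    """Fraction of the total weight held by the single largest holder."""
    weights = [weight(b) for b in balances]
    return max(weights) / sum(weights)

# Hypothetical, highly unequal balances (not real IH data).
balances = [1, 2, 4, 8, 16, 32, 64, 128, 256, 512]

linear = top_share(balances, lambda b: b)       # 1 token, 1 vote
quadratic = top_share(balances, sqrt_weight)    # square-root weighting
logarithmic = top_share(balances, log_weight)   # log weighting
print(f"1T1V: {linear:.2f}, sqrt: {quadratic:.2f}, log: {logarithmic:.2f}")
```

On balances that double from one holder to the next, the largest holder's share shrinks under square-root weighting and shrinks further under log weighting.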

In order to help participants remain neutral in the discussion and realign this debate with Rawls’s Veil of Ignorance, he also recommended a fantastic mechanism to help large holders separate the outcomes of this discussion from its impact on their personal holdings: what if we decided that there was going to be a lottery and everyone was going to have their IH swapped (or averaged) with the person they drew at random? I think this is a fantastic thought experiment that may sway some whales from voting solely for outcomes that preserve their outlandish amounts of IH.

All in all, I think this is a fantastic learning experience for the whole TEC, to have an example data set to run through these various optimization functions and gauge what we feel is fair and accurate, in line with the needs and values of the community. Much better than blindly “trusting the algorithm” that what comes out is truth. Do we want to allow a handful of whales to determine the direction of the TEC, now and moving forward? Making use of a future collective intelligence toolkit demands that we be critical and thorough with our analysis and use of these tools. Thanks again for all your hard work in pulling and analyzing this data @Tamara!

Respectfully, I request clarification on this. Power-law distributions and exponential distributions are completely different things. What aspect of this distribution is leading to the claim of “exponential”?

Power-law distributions have a roughly linear relationship between log(x) and log(P(x)), whereas exponential distributions have a roughly linear relationship between x and log(P(x)). If this were an exponential distribution, we would expect roughly the same % increase along similar scales. Do we see that?
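A quick way to see the difference is to check which pair of axes gives a straighter line. The sketch below uses two synthetic curves (not the Impact Hour data) and a hand-rolled Pearson correlation; the exponents and the `linearity` helper are assumptions chosen for illustration only.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)

def linearity(xs, ps):
    """Return (|r| on log-log axes, |r| on semi-log axes)."""
    log_x = [math.log(x) for x in xs]
    log_p = [math.log(p) for p in ps]
    return abs(pearson_r(log_x, log_p)), abs(pearson_r(xs, log_p))

xs = list(range(1, 51))
power_law = [x ** -2.0 for x in xs]             # P(x) proportional to x^-2
exponential = [math.exp(-0.2 * x) for x in xs]  # P(x) proportional to e^(-0.2x)

pl_loglog, pl_semilog = linearity(xs, power_law)
ex_loglog, ex_semilog = linearity(xs, exponential)
print(f"power law:   log-log {pl_loglog:.3f}, semi-log {pl_semilog:.3f}")
print(f"exponential: log-log {ex_loglog:.3f}, semi-log {ex_semilog:.3f}")
```

The power-law curve is straighter on log-log axes and the exponential curve is straighter on semi-log axes. Running the same comparison on the real distribution would be one piece of evidence, though a proper fit would need more care than a correlation coefficient.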

It’s a technical but important question to iron out, since we’ve been working on the assumption of this being a Pareto power law distribution. I’m open to being convinced that this is better fit by an exponential distribution, but it’s a strong claim that needs evidence. It would be unusual to see human work or resources following an exponential distribution, and would indicate that Impact Hour distribution was really abnormal in some interesting way.

Remember, the IH’s only represent 10-25% of the TEC Hatch tokens (most likely around 20%), and the rest of the Hatch Tokens will be held by members of the Trusted Seed that send wxDai into the Hatch. Then both groups will be diluted by the Minting of tokens from the Augmented Bonding Curve.

Is voting power relevant?

Quadratic voting specifically addresses the issues around voting power distribution. Looking only at voting power ignores the quadratic voting factor and, I would argue, creates irrelevant statistics.

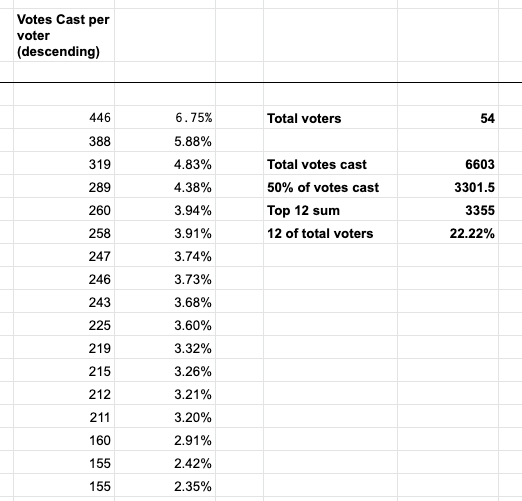

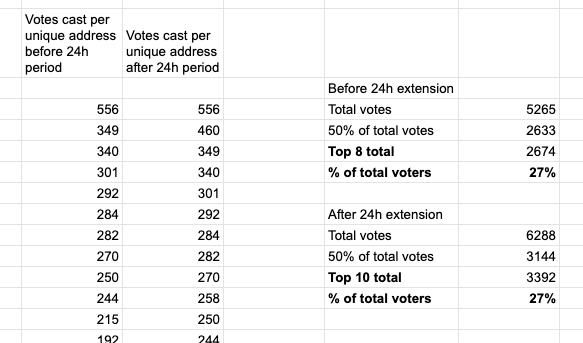

We count votes, not voting power, so we should look at votes when analyzing the data.

I didn’t make the charts, but I added the vote math to the spreadsheet, and it took 9 voters before the extension and 10 voters after to have a majority.

- Before the 24 hour extension:

  - The top 9 out of 30 voters (30%) accounted for 50% of the entire votes counted.

- After the 24 hour extension:

  - The top 10 out of 37 voters (27%) accounted for 50% of the entire votes counted.

These are pretty good results; skin in the game should be included in the dynamics. IMO the comparison between voting power and the votes that actually count shows why we chose to use tokenlog and quadratic voting.
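The "how many voters make up half" comparison can be reproduced with a small helper. The sketch below is hypothetical: the voting power figures are invented rather than taken from Tokenlog, and it assumes each voter puts all of their power on a single proposal, so that votes equal the square root of voting power.

```python
import math

def voters_needed_for_half(weights):
    """Smallest number of top voters whose combined weight reaches 50%."""
    ordered = sorted(weights, reverse=True)
    half = sum(ordered) / 2
    running = 0.0
    for count, weight in enumerate(ordered, start=1):
        running += weight
        if running >= half:
            return count
    return len(ordered)

# Hypothetical voting power per voter (not the real Tokenlog data).
voting_power = [400, 225, 100, 64, 36, 25, 16, 9, 4, 1]
# Assumption: all power spent on one proposal, so votes = sqrt(power).
votes = [math.sqrt(p) for p in voting_power]

by_power = voters_needed_for_half(voting_power)
by_votes = voters_needed_for_half(votes)
print(by_power, by_votes)
```

With these made-up numbers, fewer voters are needed to reach half of the voting power than half of the counted votes, which mirrors the gap between the two sets of statistics in this thread.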

Thank you for asking this, @Griff

“Is voting power relevant?” is a good question to challenge our most basic assumptions. My understanding is the correlation with votes-that-can-be-cast makes it relevant in this discussion but agree we should also look at the actual votes cast.

I come to a diff result for the first part (see below) but the same for the second.

I believe the following is true. Is there a mistake I don’t see? Based on the tokenlog data of the primary voting period:

- it would require 10 of 37 voters, 27% of voters, alone to determine the winner.

- If all other 27 voters combined, 73% of voters, voted for a single but different option, they would not have enough votes to pass it.

Before the 24h extension: 8 out of 30 voters (27%) cast 50% of the total votes.

After the 24h extension: 10 out of 37 voters (27%) cast 50% of the total votes.

And here is what that looks like visually:

I have created an intervention proposal that addresses all of the concerns raised by the community. It can be considered an implementation reference of PRAISEMAGEDDON.

PRAISEMAGEDDON

Right now, no proposal has a majority of votes, not even close… The top-voted proposal has ~40%, and the results have flipped: at some point in the last few hours the Praisemageddon proposal was in the lead, though the No Aliens proposal has come back and taken a large-ish lead.

This has been a horrible horrible experience for our community. I regret that we even debated this at all. I really do. The repercussions of this debate are going to hurt no matter what the results are.

We have an extremely polarized community right now. This shit is like democrats vs republicans on twitter, it’s gross.

That said, we have to accept that we are where we are and think about what is best for the Commons.

The rules set forth for this vote say what we should do in the case of a polarized vote:

From Pre-Hatch Impact Hours Distribution Analysis - #50 by liviade

—Quote—

What if there is a polarized scenario, where 2 different proposals have an evenly split majority of votes in the run-off?

(By evenly split, we consider a difference of less than 10 votes between first and second place.)

In this case, the top 2 authors will be invited to a hack session to merge their proposals together and commit to a collaborative result.

—End Quote—

I think it was a mistake to define this so specifically as 10 votes… and that the spirit of the idea is that we should accept a polarized result (Praise Juanka for the forethought here).

I would like to propose that we follow the spirit of these rules and that Jeff and I hack out a final solution that is a combination of both proposals. We have a lot of different opinions on a lot of things, but above everything else, we love each other and this community and will find a solution that we can be proud of.

What I would propose to start is that ~50% impact hours from one proposal is counted as people’s IH and ~50% impact hours from the other proposal is counted as people’s IH, and the 2 amounts get added together.

The 50% wouldn’t be 50% but would be the % of votes that each proposal got.

This allows everyone to win a little bit, and everyone’s votes to be counted which is just the best that we can do right now. Instead of a clearly defined set of winners and losers, we can have all the voters for these 2 proposals represented in the final outcome.

Honestly, this whole time I have been watching this as a train wreck waiting to happen. I never saw a way to push the car off the track to avoid the destruction ahead…

Amazingly, I think that with a proposal like this we can actually “Split the baby in half” and it will work out. (Google that phrase if you don’t know what I’m talking about.)

I have had a lot of anxiety about this, and when Livia brainstormed with me and this idea popped out, it was the first time in weeks that I felt hope around this situation. That we could actually have a solution that doesn’t completely just disregard a large swath of our community.

I’m not happy about an intervention at all. I never thought it was a good idea; we should have clearly laid out the rules in advance if we wanted to do that, and then stuck with them. IMO it’s too late to change the rules and decide to take from some to give to others. But that is not everyone’s opinion, and I really love that we have a community of diverse opinions.

Looking forward, COMPROMISE is a value that will hold us together better than accepting the vile, false dichotomies of democrat vs republican, socialist vs libertarian, Good vs bad, right vs wrong.

There is no “good” here, there is no “right” here. There is only best…

Compromise is something I think we as a community can be proud of, and it is at the very least in the spirit of the rules as set forth for this process, if not explicitly defined as the solution for a polarized outcome.

I think it was a mistake to define this so specifically as 10 votes… and that the spirit of the idea is that we should accept a polarized result (Praise Juanka for the forethought here).

Agree on this.

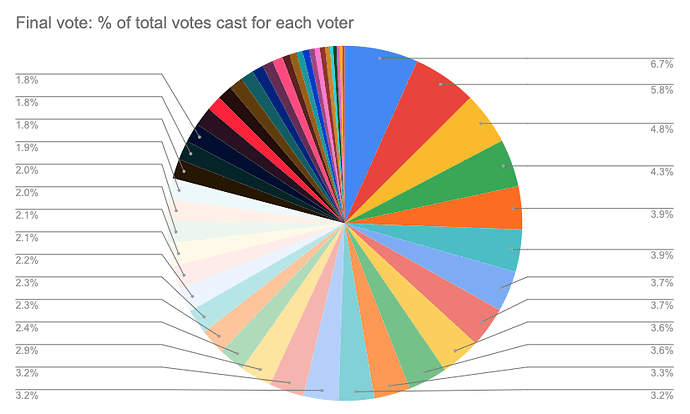

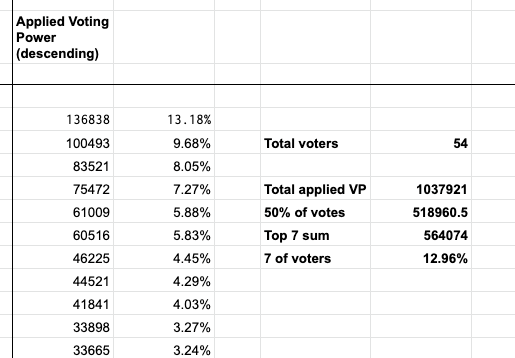

For completeness, here is how the final result breaks down.

Link to the Transparency of IH Voting.

Final vote

- There were 54 voters

- 7 of the 54 voters (13%) applied over 50% of voting power

- 12 of 54 voters (22%) cast over 50% of the votes.

I want to address three separate things in one post.

First, I wish I had waited to reply to the post by @JeffEmmett about the ideas of log voting and randomization. In rereading my response, I don’t think it fits the spirit of this community, and it comes across as much more combative than intended. Precision over terminology can come later in the process, if it even matters at all – we can discuss the ideas on their merits in a way that is accessible to all without getting bogged down in technicalities. Let’s avoid the Green Lumber Fallacy.

Second, I want to commend both @JeffEmmett and @Griff for their conciliatory energy in the debate earlier this week. This community is really something special, and I think that the way this discussion proceeded is actually a net positive for its growth. I want to thank everyone who met with me and helped me grow as a data analyst through this process.

Finally, on quadratic voting. It seems we are considering various alternatives, and I wanted to point to the pairwise modifications used by GitCoin. I believe the version currently being used by TokenLog is just sum(sqrt(x)) (where x is a list of the quantities of votes for a proposal). The GitCoin version is square(sum(sqrt(x))) - sum(x) – this subtracts off the original voting power, so if only one person votes for a proposal, that proposal receives 0 votes. There is a blog post by Vitalik Buterin with a nice geometric illustration: Pairwise coordination subsidies: a new quadratic funding design - Economics - Ethereum Research

The article also proposes a “pairwise coordination subsidy” which is a second potential modification – this essentially punishes the formation of coalitions that only vote for one issue. These ideas are already established in a related community, and they might be worth throwing in the “Voting Ideas” hat.
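The two formulas quoted above can be compared directly. This is a minimal sketch of the formulas as written in this post, not a reproduction of TokenLog's or GitCoin's actual code; the function names and sample amounts are made up.

```python
import math

def plain_quadratic_tally(amounts):
    """sum(sqrt(x)): each voter's contribution counts as its square root."""
    return sum(math.sqrt(x) for x in amounts)

def subsidy_style_tally(amounts):
    """square(sum(sqrt(x))) - sum(x), the second formula quoted above."""
    return plain_quadratic_tally(amounts) ** 2 - sum(amounts)

# Hypothetical inputs with the same total spend of 100.
single_voter = [100]
four_voters = [25, 25, 25, 25]

for label, amounts in (("single", single_voter), ("four", four_voters)):
    print(label, plain_quadratic_tally(amounts), subsidy_style_tally(amounts))
```

A lone voter still gets a positive tally under `sum(sqrt(x))`, but exactly zero under the second formula, while the same total spread over four voters scores well under both.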

I love that the last intervention call became so emotional. I love being part of a community that has the courage to approach these difficult questions. Let’s use this opportunity as a learning and growing opportunity! Thank you all!

I didn’t take the chance to voice my thoughts at the end of the call. So, here are my 5 cents.

A lot of focus within the DAO space has been on voting. But the way votes are constructed often leads to polarization. And the more at stake, the greater the polarization. It is tempting, like we have done, to do voting rounds where in the final round it stands (mostly) between two finalists. Choose A or B, democrat or republican. First primaries, then presidential election. Polarization is inevitable in this scenario.

How can we avoid that? Maybe the suggestion by @Griff is the way to go from now on? From now on all votes end in compromise.

We want the final decision to represent the opinion of, let’s say, 75% of the community. Maybe the top five proposals make up 75% of the vote. Then those five proposal authors have to sit down and come up with a final decision where the intentions of each proposal are weighted against the others.
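Picking the proposals whose authors would sit down together could be as simple as walking down the ranking until the threshold is covered. The sketch below is hypothetical: the tallies are invented and `proposals_to_cover` is not an existing tool.

```python
def proposals_to_cover(tallies, threshold=0.75):
    """Return the top-ranked proposals that together reach the threshold."""
    ranked = sorted(tallies.items(), key=lambda item: item[1], reverse=True)
    total = sum(tallies.values())
    chosen, running = [], 0.0
    for name, votes in ranked:
        chosen.append(name)
        running += votes
        if running >= threshold * total:
            break
    return chosen

# Hypothetical vote tallies, for illustration only.
tallies = {"A": 40, "B": 30, "C": 20, "D": 10}
authors_at_the_table = proposals_to_cover(tallies)
print(authors_at_the_table)
```

The vote shares of the selected proposals could then serve as starting weights for the facilitated session.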

How does that process look? It would probably not look like a hard negotiation but more like a “warm data lab”, as described by Nora Bateson. For one, that means the process would need to be facilitated by some neutral outside person.

Imagine a presidential election where the top two candidates would together get the task of coming up with a third person to step in as president. Compromise!

Would it be interesting to explore this process, formalize it and describe it?

ps. Another way would have been to not do this kind of voting at all. I think it can be valuable to explore conviction voting as a tool for signalling and deciding outcomes for these kinds of issues as well. How do we move from a mindset of coming up with better voting tools to one of coming up with better proposals?

The Impact Hour analysis and voting process are over and here is a summary of what happened and how we are moving forward:

The TEC has chosen to use the Trusted Seed Swiss Association as its legal strategy to offer a shield to Hatch participants who will be bootstrapping the TEC’s economy. Contributors who have received Impact Hours through praise but haven’t yet activated their membership won’t receive IH tokens.

During the last 2 months, all the praise dished in the TEC was analyzed. We gathered the issues raised into a problem set that served as the template for IH intervention proposals on Tokenlog.

1. Does this proposal address that some categories may be under rewarded and others over rewarded?

2. Does this proposal address the fact that paid contributors have had a 50-85% reduction to their total number of impact hours?

3. Does this proposal address that foundational members of the Token Engineering Community may lack recognition for their less visible work?

4. Does this proposal address the distribution of impact hours in relation to equality metrics such as the Gini Coefficient?

9 proposals were submitted and the 4 top voted ones made it to the runoff.

- The top 2 proposals of the runoff were “No Abnormal Intervention” and “Praisemagedon”

- No Abnormal Intervention addressed problems 2 and 3. #2 - It suggested an infinite vesting for paid contributors, so they would still have a financial deduction but not a governance deduction for most of their IH. #3 - Proposed a Praise party for Token Engineers to ensure they were well rewarded.

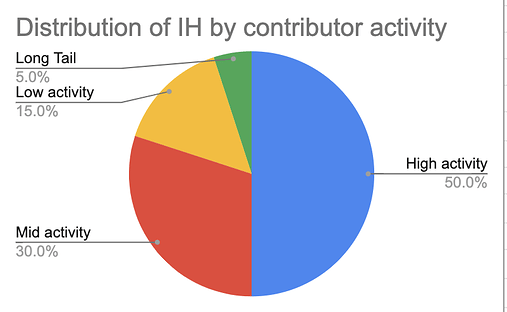

- Praisemagedon addressed the 4 problems by suggesting reshaping the distribution, dividing contributors into activity buckets of high, medium, low and longtail. It also suggested an adjustment for paid contributors.

The community reaction to these 2 proposals was very polarized: No Abnormal Intervention had 2590 votes and Praisemagedon had 2389 votes while 3rd and 4th places had 1292 votes combined.

60 unique addresses participated in the full voting cycle including the primaries and a total of 12920 votes were cast.

A scenario that would make half of the community unsatisfied if either of the top proposals won didn’t sound right. We are a value aligned community that has been working incredibly well together for the past year. This was the first polarized situation we have faced, and the first opportunity to choose compromise.

Griff and Jeff, the authors of the top two proposals, agreed to hack on the best solution to integrate them both. What does this look like?

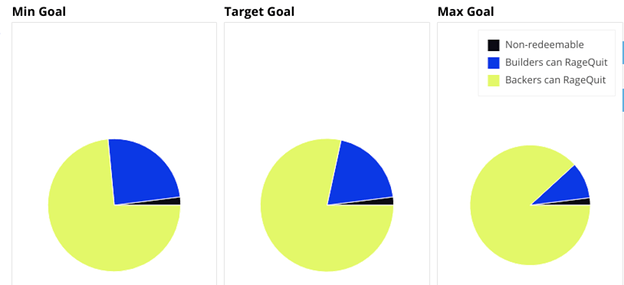

For context, the winning Hatch Params proposal #83 has set a percentage of the total token supply to be allocated to builders. 25% at the min goal, 20% at target goal and 10% at the max goal. The total number of Impact Hours being distributed is 9731.413.

Proportionally to the number of votes of the runoff, No Abnormal Intervention will have an impact over 52% of the IH and Praisemagedon over 48% of the IH. So if we reach the max goal for example, 52% of those 10% will be impacted by No Abnormal Intervention, and 48% of those 10% will be impacted by Praisemagedon.
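The 52% / 48% split follows directly from the runoff tallies quoted above. The sketch below shows the arithmetic; the helper names and the sample contributor's IH figures are hypothetical, and the blending is only the idea as described in this thread, not the final implementation.

```python
def vote_shares(votes_a, votes_b):
    """Share of the two-proposal total that each proposal received."""
    total = votes_a + votes_b
    return votes_a / total, votes_b / total

def blended_ih(ih_under_a, ih_under_b, share_a, share_b):
    """Weight a contributor's IH under each proposal by its vote share."""
    return share_a * ih_under_a + share_b * ih_under_b

# Runoff tallies from the summary: 2590 vs 2389 votes.
share_nai, share_pm = vote_shares(2590, 2389)

# Builder allocation at the max goal is 10% of supply, split by vote share.
max_goal_builders = 0.10
supply_share_nai = max_goal_builders * share_nai
supply_share_pm = max_goal_builders * share_pm

# Hypothetical contributor: 100 IH under one proposal, 60 IH under the other.
example = blended_ih(100.0, 60.0, share_nai, share_pm)
print(f"{share_nai:.1%} / {share_pm:.1%}, blended IH: {example:.1f}")
```

At the max goal this works out to roughly 5.2% and 4.8% of the total token supply being shaped by each proposal.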

How is each proposal being implemented?

No Abnormal intervention:

- It maintains the original IH distribution for 52% of the total IH.

- The last praise quantification had a larger distribution than normal with a bonus for Token Engineers who were praised in the “TE praise party”

- A proposal to implement infinite vesting to paid contributors might be submitted to the Commons after the upgrade.

- An audit was made to adjust paid contributors’ rates and fix errors from previous quantifications, maintaining the policies used in the process so far.

Praisemagedon:

- Contributors were divided into 4 buckets based on their activity.

- The buckets will correspond to Praisemagedon’s 48% distribution.

- We have 29 contributors in the high bucket that will receive 50% of the distribution, 46 contributors in the mid bucket that will receive 30%, 115 contributors in the low bucket that will receive 15% and 193 contributors in the longtail bucket that will get 5% of the distribution.

- To prevent a sharp drop between one bucket and another and aim for a gradually rounded-out distribution, a curve will be applied according to the total IH each contributor would have received if adjustments hadn’t happened. E.g.: 2 people are in the mid bucket, one with many IH, the other with fewer IH. The one with more IH will be at the top of the mid bucket, while the one with fewer will be at the bottom, closer to the low bucket.

- After the bucket distribution is ready, another augmentation will be applied where paid contributors will get deductions according to how many times they received compensation.
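To make the bucket numbers concrete, here is a rough Python sketch of the average IH per contributor in each bucket. It assumes the 48% applies directly to the 9731.413 total Impact Hours, and it ignores both the smoothing curve and the deductions for paid contributors, so the real per-person figures will differ.

```python
TOTAL_IH = 9731.413          # total Impact Hours being distributed
PRAISEMAGEDDON_SHARE = 0.48  # share of IH shaped by this proposal

# bucket -> (number of contributors, share of the distribution)
buckets = {
    "high": (29, 0.50),
    "mid": (46, 0.30),
    "low": (115, 0.15),
    "longtail": (193, 0.05),
}

# Assumption: the bucket shares are taken from 48% of the total IH.
pool = TOTAL_IH * PRAISEMAGEDDON_SHARE

# Flat average per contributor, before any curve or deduction is applied.
average_ih = {
    name: pool * share / count
    for name, (count, share) in buckets.items()
}

for name, value in average_ih.items():
    print(f"{name:>8}: {value:.2f} IH on average")
```

The curve described above would then spread contributors around these averages so that the top of one bucket sits close to the bottom of the next.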

This post will continue to be updated in the next couple of days when everything is ready and the lists with the IH results can be shared in their last version for the Hatch.

Thanks to everyone who is participating in this process

I have been predicting this since the inception of the praise system. It “works” but in a way that replicates many of the problems with the centralized banking system. Not ALL of them, but enough to make it distasteful in some ways.

Quantifying qualitative contribution is always dirty… UBI and a culture of giving (burning man style) are better solutions than this… they just avoid the quantification altogether and just reward you for being there.

But I think here we have to remember what the problem is we are trying to solve and evolve to better solve it:

Problem: How do we reward TEC contributors with sweat equity in the TEC?

Pre-Hatch Solution: Quantify Praise to catch the qualitative work and the quantitative work

Next evolution of the solution seems to be: Quantify Praise to catch the qualitative work and use Sourcecred to distribute value to the quantifiable work.

To reward the qualitative value people are producing, we must acknowledge it (praise does this pretty well!) and then we have to measure it… which is the really hard part.

Update: The proposal to restore 75% of governance rights to those who had theirs deducted will be voted on from Sept 24-27. There will be a snapshot vote, and this is the forum post with the latest information: 75% Governance Giveback - #17 by Juankbell